The Downside of Vertical Scaling GPU Instances

The Pitfalls of Vertical Scaling in GPU-Intensive Workloads

The growth of artificial intelligence, machine learning, and generative AI applications has swelled the demand for high-performance GPU workloads. To meet this demand, cloud providers have introduced a broad range of instance types. For many teams, vertical scaling has been a quick fix to increase computational power: replace a smaller instance with a more powerful one. However, this approach can be less effective, or even pointless, for GPU-intensive workloads.

Understanding GPU Workloads

GPUs are designed for high-speed, highly parallel mathematical calculations. These specialized capabilities make them a good choice, and for some applications the only practical option, for the large-scale mathematical operations found in machine learning (ML) and video editing. CPUs are primarily built for sequential task execution, whereas GPUs are the workhorses for deep learning, 3D rendering, and scientific computation.

The Basics of GPU Scaling

Instances can be scaled vertically, horizontally, or through a combination of both. Vertical scaling means upgrading to a larger server with more resources, such as CPU, memory, and GPU. Horizontal scaling means adding more instances instead of upgrading the current one, increasing resources in parallel, much like buying additional properties in the neighborhood to accommodate a growing family.

Limitations of Vertical Scaling for GPUs

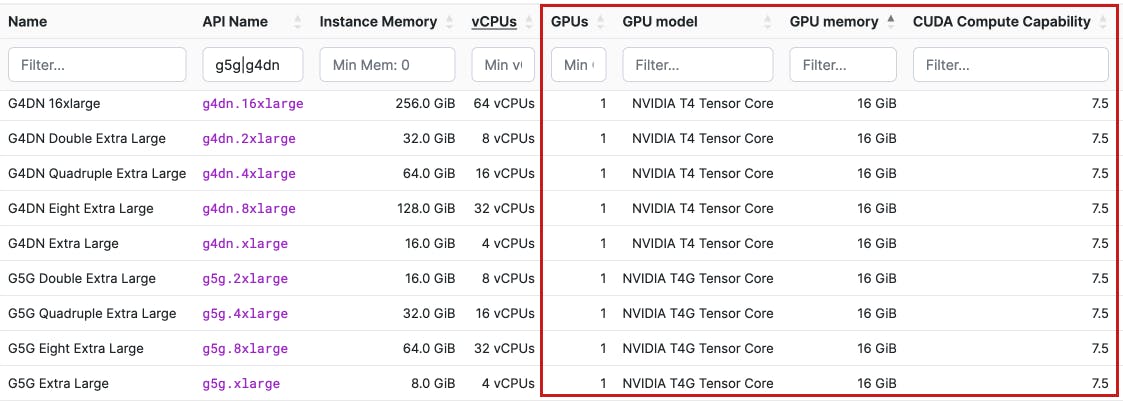

Vertical scaling is effective when a workload needs more CPU or memory, but it hits a roadblock with GPU workloads, because upgrading to a larger instance does not necessarily increase the GPU's computational power. For example, the AWS g4dn and g5g instance families house the Nvidia Tesla T4 and T4G respectively. Scaling up within one of these families, say from g4dn.xlarge to g4dn.8xlarge, adds vCPUs and memory, but the GPU power remains unchanged: both sizes contain a single GPU with the same number of GPU cores, as shown in the image above. Hence vertical scaling does not address the actual bottleneck in GPU-intensive tasks.
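The mismatch can be made concrete with a little arithmetic. The sketch below hard-codes vCPU, memory, and GPU counts for four single-GPU g4dn sizes as AWS commonly lists them; treat these figures as assumptions to verify against the current AWS instance documentation. The `InstanceSpec` class and `scale_up_gain` helper are hypothetical names introduced only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceSpec:
    name: str
    vcpus: int
    memory_gib: int
    gpus: int


# Assumed specs for single-GPU g4dn sizes; verify against current AWS docs.
G4DN_SIZES = [
    InstanceSpec("g4dn.xlarge", 4, 16, 1),
    InstanceSpec("g4dn.2xlarge", 8, 32, 1),
    InstanceSpec("g4dn.4xlarge", 16, 64, 1),
    InstanceSpec("g4dn.8xlarge", 32, 128, 1),
]


def scale_up_gain(small: InstanceSpec, large: InstanceSpec) -> dict:
    """Return how many times more of each resource `large` has than `small`."""
    return {
        "vcpus": large.vcpus / small.vcpus,
        "memory": large.memory_gib / small.memory_gib,
        "gpus": large.gpus / small.gpus,
    }


if __name__ == "__main__":
    base = G4DN_SIZES[0]
    for spec in G4DN_SIZES[1:]:
        gain = scale_up_gain(base, spec)
        print(
            f"{base.name} -> {spec.name}: "
            f"vCPU x{gain['vcpus']:.0f}, "
            f"memory x{gain['memory']:.0f}, "
            f"GPU x{gain['gpus']:.0f}"
        )
```

Under these assumed specs, every step up multiplies CPU and memory while the GPU multiplier stays at 1, which is exactly the resource a GPU-bound job is waiting on.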

Practical Implications

The implication is evident: when your application demands more GPU power, vertically scaling your instance will not deliver the required performance improvements. This inefficiency can lead to not just stagnant performance, but also increased costs, as you are paying for additional CPU and memory resources that your workload may never utilize.

An Alternative to Vertical Scaling

When the bottleneck is GPU compute, horizontal scaling becomes a more viable solution. By adding more instances to your cloud infrastructure, you can achieve a near-linear increase in GPU capacity. Horizontal scaling with the right kind of servers also improves resource utilization, making it more cost-effective. Consider training an ML model: a vertically scaled server adds more CPU and memory but does not improve GPU power, so a GPU-bound training job will see little or no improvement in training time. Shifting to horizontal scaling, with multiple GPU instances working in tandem, can significantly reduce training time.
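To get a rough feel for what "near-linear" means, here is a minimal back-of-the-envelope sketch. It assumes each additional instance contributes a fixed fraction of one GPU's throughput (`scaling_efficiency`), which is a simplification: real distributed training loses time to communication and synchronization in ways that depend on the model and network. The function name, the 0.9 default, and the 10-hour baseline are all hypothetical values chosen for illustration, not measurements.

```python
def estimated_training_hours(
    single_gpu_hours: float,
    num_instances: int,
    scaling_efficiency: float = 0.9,  # assumed, not measured
) -> float:
    """Estimate training time when spreading a job over single-GPU instances.

    The first instance counts as one full GPU; each extra instance is assumed
    to add `scaling_efficiency` of a GPU's worth of throughput.
    """
    if num_instances < 1:
        raise ValueError("num_instances must be at least 1")
    if not 0.0 <= scaling_efficiency <= 1.0:
        raise ValueError("scaling_efficiency must be between 0 and 1")
    effective_gpus = 1 + (num_instances - 1) * scaling_efficiency
    return single_gpu_hours / effective_gpus


if __name__ == "__main__":
    baseline_hours = 10.0  # hypothetical single-GPU training time
    for n in (1, 2, 4, 8):
        hours = estimated_training_hours(baseline_hours, n)
        print(f"{n} instance(s): about {hours:.2f} hours")
```

A vertically scaled single-GPU instance corresponds to `num_instances=1` in this model regardless of its size, so its estimate never drops below the baseline.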

Conclusion

While vertical scaling offers a quick performance boost in certain scenarios, it falls short with the GPU instance options currently available. I highly recommend reading up on horizontal and vertical scaling for a deeper understanding of when to employ each strategy. A nuanced grasp of these methods is crucial for optimizing performance and managing cost across different computational scenarios. For GPU-bound tasks, adding more GPU power through horizontal scaling, or re-architecting the solution, is often the more effective path. Even when scaling horizontally, opt for the smallest instance size in the family, such as g5g.xlarge or g4dn.xlarge, to avoid paying for CPU power you do not need. By adopting the right scaling strategy, you can ensure that your infrastructure is not just robust but also cost-effective and performance-optimized for your specific computational needs.

More From the Author

Are AWS Deep Learning AMIs saving you time? A counterproductive approach may be hindering your progress! Learn about the issues and the solution at How Your AWS Deep Learning AMI is Holding You Back

Creating Cost-Effective Deep Learning AMI Deployments with Spot Instances: Navigate the parallel frontier! Read the full article at Deep Learning on AWS Graviton2 powered by Nvidia Tensor T4g

Fine-Tune FFmpeg for Optimal Performance with a detailed compilation guide: Optimize your multimedia backend for optimal performance! A comprehensive guide is available at Tailor FFmpeg for your needs.

GPU Acceleration for FFmpeg: A Step-By-Step Guide with Nvidia GPU on AWS! Check the complete guide at Enable Harware Accelaration with FFmpeg

CPU vs GPU Benchmark for Video Transcoding on AWS: Debunking the CPU-GPU myth! See for yourself at Challenging the Video Encoding Cost-Speed Myth

Crafting the Team for Sustainable Success: Are "Rockstars" the Ultimate Solution for a Thriving Team? Explore the insights at Beyond Rockstars

How "Builders" Transform Team's Productivity: Navigating Vision & Ideas to Reality! Discover more about Builders at Bridging Dreams and Reality

Mental Well-being in Tech: Cultivating a Healthier Workplace for Tech Professionals. Explore insights at The Dark Side of High-Tech Success!

Freelancer to Full-Time: Understanding Corporate Reluctance. Discover insights at Why Businesses Hesitate to Employ Freelancers

Transitioning from Freelancer to Full-Time: Hurdles to Overcome! Check out Why Businesses Hesitate to Employ Freelancers

Scaling the Cloud: Discover the Best Scaling Strategy for Your Cloud Infrastructure at Vertical and Horizontal Scaling Strategies

The Future of Efficient Backend Development: Efficient Backend Development with Outerbase! Discover the details at Building a Robust Backend with Outerbase
